On a normal day, everything looks fine: the website is fast, APIs are calm, and monitoring dashboards stay in comfortable ranges.

Then a big newsletter goes out, a paid campaign performs better than expected, or Black Week (Black Friday and Cyber Monday) kicks off. Suddenly traffic spikes and you quickly see whether your infrastructure is well designed — or whether every peak turns into a late-night incident.

For many projects (eCommerce, SaaS, online banking, media, gaming, etc.), traffic peaks are not an anomaly; they are part of the business model. And when we talk about mission-critical environments, with geo-redundant high availability and network storage with synchronous replication, the margin for error is very small.

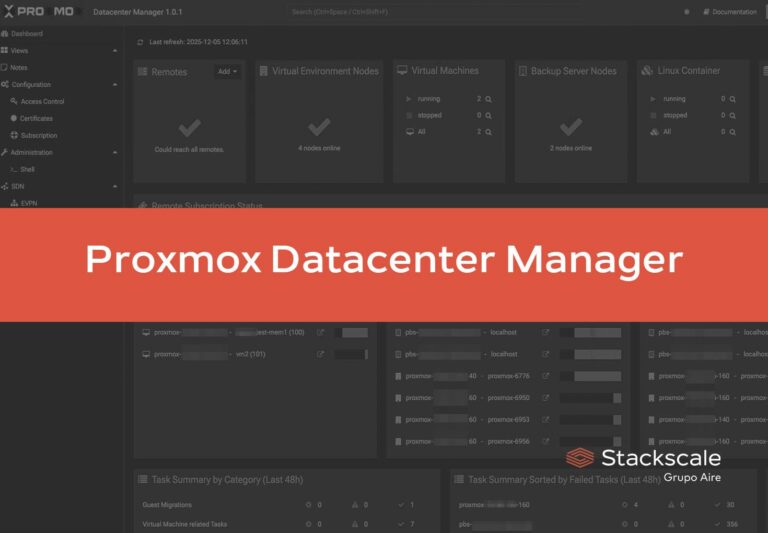

At Stackscale, where we work every day with private cloud based on Proxmox or VMware, bare-metal servers and network storage solutions for exactly these scenarios, the conclusion is quite clear:

it’s not just about “adding more machines”, it’s about designing the architecture so that these peaks are part of the plan.

1. First things first: really understand your traffic patterns

Without data, everything else is just intuition. The first step is having a clear picture of how your platform behaves.

Questions you should be able to answer without breaking a sweat:

- What does your “normal” traffic look like per hour and per day of the week?

- What have your real peaks been over the last 6–12 months, and what triggered them?

- Which parts of the platform suffer first under stress (checkout, login, APIs, internal search, private area, etc.)?

- At what point do errors, high response times or conversion drops start to appear?

To get there, the minimum reasonable approach is to combine:

- Application metrics: response times per endpoint, error ratios, job queues.

- Infrastructure metrics: CPU, RAM, disk I/O, network latency, storage usage.

- Business data: abandoned carts, forms that never complete, support tickets during campaigns.
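
As a minimal sketch of what "application metrics per endpoint" can look like in practice, the snippet below computes a p95 response time and an error ratio from a handful of request records. The record layout, the sample values and the choice to count only 5xx responses as errors are assumptions for illustration; in a real platform these numbers would normally come from your monitoring stack rather than from a script.

```python
import math
from collections import defaultdict

# Hypothetical sample: (endpoint, response time in ms, HTTP status).
SAMPLE_LOG = [
    ("/checkout", 180, 200),
    ("/checkout", 950, 200),
    ("/checkout", 2400, 502),
    ("/search", 90, 200),
    ("/search", 130, 200),
]


def summarize(records):
    """Return {endpoint: (p95 latency in ms, error ratio)}."""
    by_endpoint = defaultdict(list)
    for endpoint, ms, status in records:
        by_endpoint[endpoint].append((ms, status))

    summary = {}
    for endpoint, rows in by_endpoint.items():
        latencies = sorted(ms for ms, _ in rows)
        # Nearest-rank p95: smallest value with at least 95% of samples at or below it.
        rank = max(1, math.ceil(0.95 * len(latencies)))
        # Assumption: only 5xx responses count as errors.
        errors = sum(1 for _, status in rows if status >= 500)
        summary[endpoint] = (latencies[rank - 1], errors / len(rows))
    return summary


if __name__ == "__main__":
    for endpoint, (p95, error_ratio) in sorted(summarize(SAMPLE_LOG).items()):
        print(f"{endpoint}: p95={p95} ms, errors={error_ratio:.1%}")
```

Tracking the same two numbers per endpoint on a normal day and on a campaign day is usually enough to see which part of the platform degrades first.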

With that base you can start talking about capacity in a meaningful way:

“we want to handle 3× last November’s peak” is very different from

“we need something more powerful because the site feels slow”.

2. Optimize before you scale: common bottlenecks

The most common reaction when a site goes down during a traffic spike is to blame the hosting. And sometimes that’s fair… but very often it’s not the whole story.

In practice, we usually see a combination of:

Heavy frontend

- Huge uncompressed images or no use of modern formats.

- Too many third-party scripts: tags, pixels, chats, A/B testing tools, etc.

- Themes or frontends overloaded with JavaScript that block rendering.

The more “expensive” each visit is in terms of resources, the faster your infrastructure saturates — no matter how good your bare-metal servers or private cloud are underneath.

Backend under pressure

- Database queries without proper indexes, or logic that is simply too complex.

- Overuse of plugins, modules or extensions that add logic to every request.

- Lack of caching (page, fragment or object cache) that forces recalculation on each request.

- Tasks that should go to a queue (bulk email sending, report generation, external integrations) running in real time.
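
To illustrate the caching point, here is a small sketch of an in-process object cache with a time-to-live. The class name `TTLCache`, the method `get_or_compute` and the 30-second value are hypothetical; production setups would more likely rely on a shared cache such as Redis or on the framework's own cache layer, but the principle is the same: compute once, then serve the stored result until it expires.

```python
import time


class TTLCache:
    """Tiny in-process object cache with per-entry expiry (illustrative only)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so the cache can be tested without sleeping
        self._store = {}            # key -> (expiry timestamp, value)

    def get_or_compute(self, key, compute):
        """Return the cached value for key, calling compute() only on a miss or after expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = compute()
        self._store[key] = (now + self.ttl, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=30)

    def load_product_listing():
        # Stand-in for an expensive database query or template render.
        return ["product-1", "product-2"]

    print(cache.get_or_compute("listing:home", load_product_listing))
    print(cache.get_or_compute("listing:home", load_product_listing))  # served from cache
```

Even a short TTL can remove most of the repeated work during a spike, because many visitors request the same pages within the same few seconds.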

Infrastructure at the limit

Even with good code, there are physical limits:

- CPU and RAM permanently high during peak hours.

- Storage systems or arrays saturated in terms of IOPS.

- Virtual machines oversized for secondary services and undersized for the critical path.

Practical rule of thumb:

before increasing resources at Stackscale (or anywhere else), it’s highly recommended to:

- Clean up frontend and media.

- Review database and cache usage.

- Remove unnecessary complexity from the backend.

Every improvement in this layer means the same hardware can handle more traffic, and every euro invested in infrastructure delivers better returns.

3. From a single server to a peak-ready architecture

Many projects start on a single server (physical or virtual) acting as a “Swiss army knife”: web, database, cache, cron jobs, queues… all in one place.

It works — until it doesn’t.

Once the business depends on that platform or traffic grows, priorities shift to:

- Reducing single points of failure.

- Separating responsibilities.

- Being able to add capacity without breaking everything.

Typical architectures we see in critical environments

A very common pattern in private cloud or bare-metal deployments:

- Several application nodes behind one or more load balancers.

- A separate database, with the option of read replicas when volume requires it.

- A cache/intermediate storage layer (for example Redis, Varnish or another caching proxy).

- Centralised, redundant network storage, simplifying high availability setups.

At Stackscale, many customers choose this type of architecture from the start or grow into it in stages, especially when:

- They cannot afford prolonged downtime windows.

- Traffic growth is structural, not just occasional.

- There are demanding requirements in terms of compliance, audits or internal SLAs.
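
The "several application nodes behind a load balancer" pattern can be sketched in a few lines. The snippet below is a simplified round-robin balancer that skips nodes marked as unhealthy; the class and node names are hypothetical, and a real deployment would use a dedicated load balancer with active health checks rather than application code.

```python
class RoundRobinBalancer:
    """Rotate requests across application nodes, skipping unhealthy ones."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._next = 0

    def mark_down(self, node):
        self.healthy.discard(node)

    def mark_up(self, node):
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self):
        """Return the next healthy node, or raise if none is available."""
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next % len(self.nodes)]
            self._next += 1
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy application nodes")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([balancer.pick() for _ in range(4)])
    balancer.mark_down("app-2")  # simulate a failed node
    print([balancer.pick() for _ in range(4)])
```

The point of the sketch is the failure case: when one node disappears, traffic keeps flowing to the remaining ones, which only works if those nodes have enough spare capacity to absorb it.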

4. Geo-redundant high availability and synchronous replication: taking “plan B” seriously

When we talk about mission-critical workloads, it’s not enough that one node can replace another inside the same data center. You also have to consider:

- Full data center failures.

- Network issues at carrier level.

- Serious incidents at region/city level.

This is where two concepts come in:

- Geo-redundancy: having resources ready in a secondary data center that can take over if the primary fails.

- Synchronous storage replication between data centers: writes are only confirmed once they have been stored on both sides, minimizing the risk of data loss in case of a failure.

This kind of architecture makes sense when:

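- The acceptable RPO (recovery point objective, the amount of data you can afford to lose) is practically 0: you cannot lose transactions.

- The expected RTO (recovery time objective, the time allowed to restore service) is very low: recovery in minutes.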

- The impact of a prolonged outage clearly outweighs the additional cost of geo-redundancy.
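
The idea behind synchronous replication, that a write is only confirmed once both sides have stored it, can be shown with a toy model. The `Site` class and `synchronous_write` function below are hypothetical stand-ins, not how any particular storage product is implemented; in particular, how a real array behaves when the remote side is unreachable is product-specific and not modelled here.

```python
class Site:
    """Stand-in for the storage at one data center."""

    def __init__(self, name):
        self.name = name
        self.available = True
        self.blocks = {}

    def store(self, key, value):
        if not self.available:
            raise ConnectionError(f"{self.name} is unreachable")
        self.blocks[key] = value


def synchronous_write(primary, secondary, key, value):
    """Acknowledge the write only after both sites have stored it."""
    primary.store(key, value)
    secondary.store(key, value)  # raises if the remote site cannot confirm
    return "acknowledged"


if __name__ == "__main__":
    dc_a, dc_b = Site("dc-a"), Site("dc-b")
    print(synchronous_write(dc_a, dc_b, "order-1001", "paid"))

    dc_b.available = False  # simulate losing the secondary data center
    try:
        synchronous_write(dc_a, dc_b, "order-1002", "paid")
    except ConnectionError as exc:
        # The caller never receives an acknowledgement for this write.
        print(f"write not acknowledged: {exc}")
```

Because every acknowledged write exists on both sides, losing one data center does not lose confirmed transactions, which is what an RPO of practically 0 requires. The price is that each write waits for the round trip between data centers.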

In this context, a provider like Stackscale brings:

- Redundantly interconnected data centers.

- Network storage solutions designed for synchronous replication.

- Technical teams used to sizing and operating this kind of environment.

5. Capacity planning: enough headroom without paying for over-engineering all year long

The practical question is: how much headroom do I really need?

You don’t want an infrastructure that is permanently oversized, nor do you want to run “in the red” all the time:

- Start from real peaks, not gut feelings.

  - Put the actual graphs of your strongest days on the table.

- Define a reasonable target:

  - For example, handle 3× your current peak with acceptable response times.

- Translate that into infrastructure:

  - How many application nodes?

  - What size and type of bare-metal servers or private cloud resources?

  - What minimum performance do you need from network storage?

- Design growth steps with your provider:

  - Increase CPU/RAM for VMs without stopping the service.

  - Add nodes to the application cluster.

  - Increase capacity and performance of network storage and the inter-DC network.
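
Translating a target into a number of application nodes is simple arithmetic once you have measured figures. The sketch below assumes you know your current peak in requests per second and the throughput one node sustains in a load test; the function name, the 70% utilization ceiling, the single spare node and the sample numbers are all illustrative assumptions, not recommendations.

```python
import math


def nodes_needed(current_peak_rps, growth_factor, rps_per_node,
                 max_utilization=0.7, spare_nodes=1):
    """Estimate how many application nodes a target peak requires.

    max_utilization keeps each node below its measured limit;
    spare_nodes covers the loss of a node during the peak.
    """
    target_rps = current_peak_rps * growth_factor
    usable_rps_per_node = rps_per_node * max_utilization
    return math.ceil(target_rps / usable_rps_per_node) + spare_nodes


if __name__ == "__main__":
    # Hypothetical figures: 400 req/s peak, 3x target, 250 req/s per node.
    print(nodes_needed(current_peak_rps=400, growth_factor=3, rps_per_node=250))  # 8
```

With these assumed figures the target is 400 × 3 = 1,200 req/s, each node is counted at 250 × 0.7 = 175 req/s, and the result is 7 nodes plus 1 spare. The same reasoning applies to storage IOPS or network bandwidth, as long as the per-unit capacity comes from a measurement and not from a datasheet.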

Here the advantage of a hosted private cloud versus on-premise is obvious: you can grow step by step, with more predictable costs and without over-provisioning your own hardware “just in case”.

6. Dress rehearsal: test the limit before your next campaign

You don’t want to discover your limits on the day of your biggest campaign of the year. The sensible approach is to run a dress rehearsal in advance.

Minimum test elements:

- Load tests on critical paths (homepage, search, listing pages, product pages, login, checkout, APIs).

- Fine-grained monitoring during tests:

  - Response times.

  - CPU/RAM usage.

  - I/O latency and saturation.

  - Application errors.

- Failure simulations:

  - Remove one application node from the load balancer and see how the system reacts.

  - Test how the platform behaves with partial storage or network failures (where the environment allows, always in coordination with the infrastructure team).
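
As a rough illustration of what a load test measures, the harness below fires a number of concurrent calls and reports the p95 response time and the error ratio. It is a sketch only: `run_load` and `fake_checkout` are hypothetical names, the request function is a stand-in that sleeps instead of calling a real endpoint, and a real rehearsal would normally use a dedicated load-testing tool against a staging or agreed production window.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(request_fn, total_requests, concurrency):
    """Call request_fn total_requests times using `concurrency` worker threads.

    request_fn must return a truthy value on success; exceptions count as failures.
    """
    if total_requests < 1 or concurrency < 1:
        raise ValueError("total_requests and concurrency must be at least 1")

    def timed_call(_):
        start = time.perf_counter()
        try:
            ok = bool(request_fn())
        except Exception:
            ok = False
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = sorted(seconds for seconds, _ in results)
    rank = max(1, math.ceil(0.95 * len(latencies)))  # nearest-rank p95
    failures = sum(1 for _, ok in results if not ok)
    return {
        "requests": len(results),
        "p95_seconds": latencies[rank - 1],
        "error_ratio": failures / len(results),
    }


if __name__ == "__main__":
    def fake_checkout():
        time.sleep(0.01)  # stand-in for an HTTP call to a critical path
        return True

    print(run_load(fake_checkout, total_requests=200, concurrency=20))
```

Running the same scenario at increasing concurrency levels, while watching the infrastructure metrics listed above, shows where response times start to bend and which resource saturates first.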

The outcome should be a concrete list of:

- Configuration parameters to adjust.

- Resources to increase (or rebalance).

- Internal procedures for campaign day (what to watch, how often, who decides what).

7. “Good” peaks vs “bad” peaks: campaigns vs DDoS

Not every traffic spike is good news. On top of successful campaigns, you also have:

- DDoS attacks at network or layer-7 (HTTP) level.

- Bots crawling or attacking forms.

- Automated traffic trying to exploit vulnerabilities.

To avoid mixing everything together, you need:

- Visibility: the ability to detect anomalous behaviour by IP pattern, user-agent, country, path, etc.

- Mitigation measures:

  - WAF with specific rules.

  - Rate limiting on sensitive endpoints.

  - Upstream DDoS protection and/or use of a CDN where it makes sense.

- Response procedures:

  - What to do if traffic suddenly explodes and it’s not linked to a planned campaign.

  - How to coordinate with your infrastructure provider to filter at network level before the attack reaches your nodes.
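
Rate limiting on sensitive endpoints is often implemented with a token bucket, sketched below. This is one common technique, not necessarily what your WAF or proxy uses internally; the class name, the per-IP dictionary and the limits (5 requests per second with a burst of 10) are assumptions for illustration.

```python
import time


class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, rate_per_second, burst, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = burst
        self.clock = clock              # injectable so the limiter can be tested without sleeping
        self.tokens = float(burst)
        self.updated = clock()

    def allow(self):
        """Consume one token if available; return False when the caller should be throttled."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never above the burst capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical usage: one bucket per client IP on a login endpoint.
buckets = {}


def allow_login(client_ip):
    bucket = buckets.setdefault(client_ip, TokenBucket(rate_per_second=5, burst=10))
    return bucket.allow()
```

In practice this kind of limit usually lives at the edge (load balancer, reverse proxy or WAF) so that rejected requests never reach the application nodes.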

A solid architecture helps, but the security layer and procedures are just as important to ensure good peaks add value and bad peaks don’t take anything down.

Conclusion

Traffic peaks are not going away; in fact, they are becoming more frequent and more abrupt.

The difference lies in whether your infrastructure:

- Suffers them as an unpredictable problem, or

- Absorbs them as something already accounted for in the design.

With private cloud, bare-metal servers, network storage with synchronous replication between data centers, and an architecture built for geo-redundant high availability, you can move from “surviving as best you can” to planning growth intelligently.

If you’re reviewing your mission-critical platform or preparing your next major campaign, this is a good time to sit down with the Stackscale team, look at real data and define together what you need so that the next traffic peak is good news — not the start of an incident.

FAQ on traffic peaks and infrastructure architecture

1. What’s the difference between vertical and horizontal scaling to handle traffic peaks?

Vertical scaling means giving more resources to a single machine (more CPU, RAM, etc.). It’s simple and works well up to a point, but it has a physical limit and it remains a single point of failure.

Horizontal scaling means distributing the load across multiple application nodes behind one or more load balancers. It requires more architectural work, but offers better resilience and more room to grow, especially in mission-critical, high-availability environments.

2. What role does a private cloud provider like Stackscale play compared to an on-premise solution?

On-premise, you handle everything: hardware purchase, installation, maintenance, spare parts, growth planning, etc.

With hosted private cloud and bare-metal servers at Stackscale, you delegate the physical and data center layers (power, networking, hardware, replacements, etc.) and focus on the system and application layers. On top of that, you get geo-redundancy options between data centers and network storage prepared for synchronous replication, both of which are hard to achieve when you run a single on-premise data center.

3. How do I know if I really need geo-redundancy and synchronous replication, or if a single data center is enough?

It depends on your RPO/RTO and the real cost of downtime. If your business can afford to lose a few minutes of data and stay offline for some time while the primary data center recovers, a solid high-availability setup within one DC might be enough.

If, on the other hand, every minute of downtime has a serious impact (economic, regulatory or reputational) and you cannot afford to lose transactions, the usual approach is to plan for two data centers and some form of replication (synchronous or asynchronous, depending on the case). The final recommendation comes from balancing risk vs cost.

4. How often should I run load tests on my platform?

A sensible minimum is to run them:

- Before major predictable campaigns (Singles’ Day, Black Friday, Cyber Monday, Black Week, big product launches, etc.).

- After significant architectural changes (new application version, database change, new provider, etc.).

In very dynamic projects, some companies integrate them into their CI/CD cycle on a regular basis (for example monthly or quarterly). The key idea is to avoid letting changes in application and infrastructure pile up for years without checking again how the system behaves under stress.