In any modern infrastructure, backups are no longer a “nice to have”, but a structural component on the same level as storage or networking. When you work with Proxmox VE clusters, HA solutions, and mission-critical workloads, having a backup solution designed specifically for this ecosystem is what makes the difference between a fast recovery and a long, expensive outage.

That’s where Proxmox Backup Server (PBS) comes in: an enterprise backup solution, 100% open source, designed to protect virtual machines, containers, and physical hosts with incremental, deduplicated, encrypted backups, tightly integrated with Proxmox VE.


On Stackscale infrastructure, Proxmox Backup Server can be combined with Archive storage (via NFS or S3-compatible access), available in two regions, Madrid and Amsterdam, to build robust multi–data center backup strategies aligned with the 3-2-1 model. It can also be complemented with remote storage at other providers to add extra backup copies and further data resilience.

What is Proxmox Backup Server?

Proxmox Backup Server is a Linux distribution focused specifically on backup, based on Debian with native ZFS support, written primarily in Rust and licensed under GNU AGPLv3. Its goal is to offer an open alternative to proprietary backup solutions, without limiting the number of machines or the amount of protected data.

Key features include:

- Incremental, deduplicated backups for VMs, containers, and physical hosts.

- High-performance Zstandard compression.

- Client-side authenticated encryption (AES-256-GCM), so data travels and is stored encrypted even on targets that are not fully trusted.

- Integrity verification using checksums and schedulable verification jobs.

- Native integration with Proxmox VE, including support for QEMU dirty bitmaps for fast incremental backups.

- Support for tape (LTO) and S3-compatible storage as data backends.
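The idea behind dirty bitmaps can be shown with a small Python model: every guest write flags the blocks it touches, and the next backup reads only the flagged blocks instead of the whole disk. This is a conceptual sketch, not the QEMU or Proxmox VE implementation; `DirtyBitmap`, `incremental_backup()`, and the tiny 4-byte block size are hypothetical choices made for the demo.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # assumption: tiny blocks for the demo; real granularity is much larger


@dataclass
class DirtyBitmap:
    """Hypothetical model of a per-disk dirty bitmap (one flag per block)."""
    num_blocks: int
    bits: list = field(init=False)

    def __post_init__(self) -> None:
        # With no previous backup, every block must be read, so start fully dirty.
        self.bits = [True] * self.num_blocks

    def mark_write(self, offset: int, length: int) -> None:
        # Flag every block touched by a guest write of `length` bytes at `offset`.
        first = offset // BLOCK_SIZE
        last = (offset + length - 1) // BLOCK_SIZE
        for i in range(first, last + 1):
            self.bits[i] = True

    def dirty_blocks(self) -> list:
        return [i for i, dirty in enumerate(self.bits) if dirty]

    def clear(self) -> None:
        self.bits = [False] * self.num_blocks


def incremental_backup(disk: bytearray, bitmap: DirtyBitmap) -> dict:
    """Read only the dirty blocks, then reset the bitmap for the next run."""
    changed = {
        i: bytes(disk[i * BLOCK_SIZE:(i + 1) * BLOCK_SIZE])
        for i in bitmap.dirty_blocks()
    }
    bitmap.clear()
    return changed


disk = bytearray(b"A" * 16)                # 4 blocks of 4 bytes
bitmap = DirtyBitmap(num_blocks=4)

first = incremental_backup(disk, bitmap)   # first run reads all 4 blocks
disk[5:7] = b"ZZ"                          # guest write inside block 1
bitmap.mark_write(5, 2)
second = incremental_backup(disk, bitmap)  # only block 1 is read

print(len(first), sorted(second))          # 4 [1]
```

The first run reads everything because no bitmap history exists yet; afterwards, the amount of data read depends on how much the guest wrote, not on the disk size.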

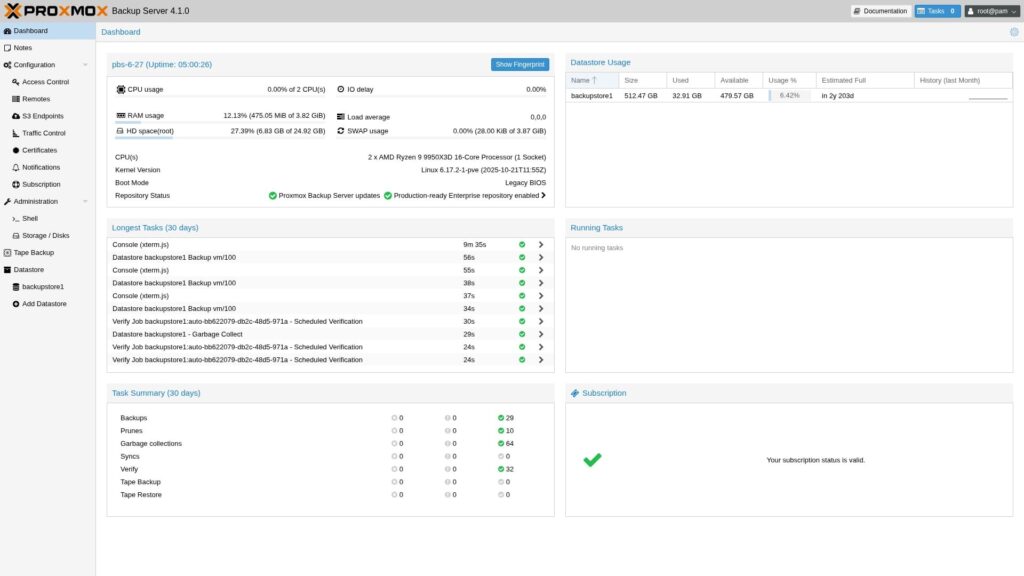

Proxmox Backup Server 4.1, released in November 2025, is based on Debian 13.2 “Trixie”, uses Linux kernel 6.17.2-1 as its new default kernel, and ships ZFS 2.3.4. It introduces important improvements in traffic control, verification, and the use of S3-based storage.

Architecture and how it works

Client–server model and namespaces

PBS follows a client–server model: one or more backup servers store the data, while clients (including Proxmox VE) send backups incrementally over TLS 1.3. Backups are organized into datastores and namespaces, which makes it possible to separate environments (for example, production, preproduction, or different clusters) while maintaining effective deduplication.

Thanks to deduplication and incremental backups, only the blocks that have changed since the last backup are transferred and stored, drastically reducing network and storage consumption.
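A minimal Python sketch of content-addressed deduplication helps show why unchanged data is never stored twice. It assumes fixed-size chunks identified by their SHA-256 digest (PBS uses fixed-size chunks for VM images and content-defined chunking for file archives); `ChunkStore`, its methods, and the 4-byte chunk size are hypothetical and chosen only for illustration.

```python
import hashlib

CHUNK_SIZE = 4  # assumption: tiny chunks for the demo


class ChunkStore:
    """Hypothetical datastore: each chunk is stored once, keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}

    def backup(self, data: bytes) -> tuple[list[str], int]:
        """Split `data` into chunks and store only the unknown ones.

        Returns the index (ordered list of digests) and the number of
        chunks that actually had to be uploaded.
        """
        index = []
        uploaded = 0
        for start in range(0, len(data), CHUNK_SIZE):
            chunk = data[start:start + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:
                self.chunks[digest] = chunk
                uploaded += 1
            index.append(digest)
        return index, uploaded

    def restore(self, index: list[str]) -> bytes:
        # A snapshot is just an ordered list of digests pointing into the store.
        return b"".join(self.chunks[d] for d in index)


store = ChunkStore()
v1 = b"AAAABBBBCCCCDDDD"
v2 = b"AAAABBBBXXXXDDDD"          # one chunk changed

idx1, up1 = store.backup(v1)      # 4 new chunks
idx2, up2 = store.backup(v2)      # only the changed chunk is new

print(up1, up2, len(store.chunks))   # 4 1 5
```

Both snapshots remain complete and restorable through their indexes, yet the second one costs a single new chunk. The same mechanism is why separate namespaces inside one datastore still share deduplication.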

Integration with Proxmox VE

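In Proxmox VE, you only need to add a PBS datastore as a new backup storage to start using it for VMs and containers. From the Proxmox VE interface itself, you then define backup jobs targeting the PBS datastore, including which guests to protect, the schedule, and the retention settings. Each backup appears as a full snapshot, while only changed data is actually transferred.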

PBS also allows you to restore:

- Full VMs (with live restore support in many cases).

- Full containers.

- Individual files from VM or container backups, directly from the GUI or an interactive shell.

Security, integrity, and ransomware protection

Security is one of the core pillars of Proxmox Backup Server:

- Client-side encryption with AES-256-GCM, so the backup server cannot read the data without the corresponding keys.

- SHA-256 checksums to verify the integrity of all stored blocks.

- Schedulable verification jobs to detect bit rot or corruption.

- A granular role-based access control (RBAC) model with roles, API tokens, integration with LDAP, Active Directory, and OpenID Connect, plus 2FA support.
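Checksum-based verification can be sketched in a few lines of Python: if each chunk is stored under the SHA-256 digest of its content, a verification pass simply recomputes every hash and reports mismatches. This is a simplified model; `verify_chunks()` is a hypothetical helper, and it ignores compression, encryption, and the PBS on-disk blob format.

```python
import hashlib


def verify_chunks(chunks: dict[str, bytes]) -> list[str]:
    """Return the digests of chunks whose content no longer matches their name.

    Simplified model: each chunk is keyed by the SHA-256 hex digest of its
    raw content, so recomputing the hash detects bit rot or tampering.
    """
    corrupted = []
    for digest, data in chunks.items():
        if hashlib.sha256(data).hexdigest() != digest:
            corrupted.append(digest)
    return corrupted


good = b"backup data"
bad = b"other data"
store = {
    hashlib.sha256(good).hexdigest(): good,
    hashlib.sha256(bad).hexdigest(): b"other dat\x00",  # simulated bit rot
}

print(verify_chunks(store) == [hashlib.sha256(bad).hexdigest()])  # True
```

Running such a pass on a schedule, rather than only at restore time, is what turns silent corruption into an alert you can act on while healthy copies still exist elsewhere.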

Version 4.1 also adds user-based traffic control and bandwidth limiting for S3 endpoints, which helps prevent network congestion when running heavy backup or restore operations against object storage.
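Bandwidth limits of this kind are commonly modeled as a token bucket: a sustained rate plus a burst allowance. The Python sketch below illustrates that general technique under that assumption; it is not the PBS implementation, and the `TokenBucket` class and its parameters are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Generic token-bucket limiter: `rate` bytes/s sustained, `burst` bytes max."""

    def __init__(self, rate: float, burst: float, now: float = 0.0) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start with a full bucket
        self.last = now

    def _refill(self, now: float) -> None:
        # Tokens accumulate at `rate` per second, capped at `burst`.
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now

    def delay_for(self, nbytes: int, now: float) -> float:
        """Seconds to wait before sending `nbytes` (0.0 if it may go now).

        Tokens are consumed immediately; a negative balance represents
        bytes borrowed against future refills.
        """
        self._refill(now)
        self.tokens -= nbytes
        if self.tokens >= 0:
            return 0.0
        return -self.tokens / self.rate


bucket = TokenBucket(rate=100.0, burst=200.0)
print(bucket.delay_for(150, now=0.0))  # 0.0  (covered by the burst)
print(bucket.delay_for(150, now=0.0))  # 1.0  (100 bytes over budget at 100 B/s)
print(bucket.delay_for(100, now=3.0))  # 0.0  (bucket refilled in the meantime)
```

A sender would call `delay_for()` before each write and sleep for the returned time: short spikes pass through thanks to the burst, while the rate caps sustained throughput so backup windows leave room for production traffic.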

All of this makes Proxmox Backup Server a very solid component in a broader strategy for defending against ransomware and large-scale infrastructure failures.

Proxmox Backup Server on Stackscale infrastructure

On Stackscale, Proxmox Backup Server can be integrated with different types of storage, but the recommended design always places the primary backup copy on Archive storage, which is isolated and redundant, and adds faster layers only when you need to accelerate recovery.

The general pattern looks like this:

- Reference copy (golden copy): always on Stackscale Archive storage, accessible via NFS or S3-compatible API, and available across three separate physical locations (2 data centers in Madrid and 1 in Amsterdam).

- Optional performance layers: additional copies on faster network storage from Stackscale (All-Flash) to speed up frequent restores.

- Local disk storage on the backup server: possible, but with clear limitations. Access to the backups depends on that specific physical server, and this layer provides no redundancy.

Archive storage as the primary backend for Proxmox Backup Server

Stackscale’s Archive storage should be the reference backend for Proxmox Backup Server, ensuring that no matter what happens to hosts or clusters, there is always a consistent, recoverable copy in a separate environment:

- Archive mounted via NFS
  - The NFS export is mounted on the PBS host, and PBS uses it as the path of a regular datastore.
  - Ideal for large backup volumes over the network, while keeping PBS’s own deduplication and compression.
  - Recommended as the primary destination for medium- and long-term retention.

- Archive via S3-compatible access
  - Archive can be exposed as an S3 bucket and configured in PBS as an S3 endpoint backing a datastore.
  - This allows you to leverage Proxmox Backup Server’s object storage capabilities (including bandwidth limiting and multi–data center scenarios).
  - Especially suitable for long-term backups and disaster recovery plans.

In both cases, Archive is available in two regions:

- 2 data centers in Madrid.

- 1 data center in Amsterdam.

This makes it easy to design multi–data center strategies: for example, keeping primary backups in Archive Madrid and periodically syncing them to Archive Amsterdam using PBS remote sync jobs.
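Conceptually, a sync job copies only the snapshots the target is missing, and for those only the chunks the target does not already hold. The sketch below models that with plain Python dicts; the data layout, the placeholder digests (`c1`, `c2`, …), and the `sync_missing()` function are hypothetical, and the model ignores PBS details such as remote authentication, namespaces, and index file transfer.

```python
def sync_missing(source: dict, target: dict) -> tuple[list[str], int]:
    """Copy snapshots missing on `target` from `source`.

    Each side is modeled as {"snapshots": {name: [digest, ...]},
    "chunks": {digest: bytes}}. Returns the snapshots copied and the
    number of chunks actually transferred.
    """
    copied = []
    transferred = 0
    for name, index in sorted(source["snapshots"].items()):
        if name in target["snapshots"]:
            continue  # already synced in an earlier run
        for digest in index:
            if digest not in target["chunks"]:
                target["chunks"][digest] = source["chunks"][digest]
                transferred += 1
        target["snapshots"][name] = list(index)
        copied.append(name)
    return copied, transferred


madrid = {
    "snapshots": {
        "vm/100/2025-11-19T02:00:00Z": ["c1", "c2"],
        "vm/100/2025-11-20T02:00:00Z": ["c1", "c3"],
    },
    "chunks": {"c1": b"base", "c2": b"old", "c3": b"new"},
}
amsterdam = {
    "snapshots": {"vm/100/2025-11-19T02:00:00Z": ["c1", "c2"]},
    "chunks": {"c1": b"base", "c2": b"old"},
}

print(sync_missing(madrid, amsterdam))  # (['vm/100/2025-11-20T02:00:00Z'], 1)
print(sync_missing(madrid, amsterdam))  # ([], 0): nothing left to copy
```

Because only new chunks cross the link, a periodic Madrid-to-Amsterdam sync stays cheap after the initial seed, even when the datastore holds a long history of snapshots.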

Fast network storage as an accelerated recovery layer

On top of Archive, customers can add a faster network storage layer from Stackscale (All-Flash) to:

- Store the most recent backups (for example, from the last few days).

- Speed up frequent restores or recovery tests.

- Reduce RTO for services that are extremely sensitive to downtime.

In this model, Archive remains the single source of truth, and the network storage acts as a hot cache for the most common restores.
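The hot-cache rule can be summarized as a small policy: restore from the fast datastore when it still holds the requested snapshot, otherwise fall back to Archive, and never let the fast layer hold something Archive lacks. The helpers and datastore names below (`pick_restore_source()`, `hot_layer_violations()`, `fast-store`, `archive-store`) are hypothetical; in practice, the administrator chooses the source datastore when starting a restore.

```python
def pick_restore_source(snapshot: str, fast: set[str], archive: set[str]) -> str:
    """Choose where to restore `snapshot` from (hypothetical policy helper)."""
    if snapshot in fast:
        return "fast-store"      # hypothetical All-Flash datastore name
    if snapshot in archive:
        return "archive-store"   # hypothetical Archive datastore name
    raise LookupError(f"snapshot {snapshot!r} is not in any datastore")


def hot_layer_violations(fast: set[str], archive: set[str]) -> set[str]:
    """Snapshots present only in the hot layer, i.e. not backed by Archive."""
    return fast - archive


archive = {"vm/100/2025-11-18", "vm/100/2025-11-19", "vm/100/2025-11-20"}
fast = {"vm/100/2025-11-20"}  # only the most recent backup is kept hot

print(pick_restore_source("vm/100/2025-11-20", fast, archive))  # fast-store
print(pick_restore_source("vm/100/2025-11-18", fast, archive))  # archive-store
print(hot_layer_violations(fast, archive))                      # set()
```

An empty result from `hot_layer_violations()` is the "single source of truth" property stated above: losing the fast layer costs speed, never data.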

Local disk: a situational option, but use with care

Local storage on the Proxmox Backup Server host itself can still be used in some scenarios (for example, as an initial backup buffer), but it comes with several drawbacks:

- Access to the backups depends on the health of that specific physical server.

- There is no intrinsic redundancy at this layer unless data is replicated to Archive or network storage.

- In the event of a serious host or local storage failure, the most valuable backup layer could be lost.

That’s why, in professional architectures on Stackscale, the recommended approach is:

- Stackscale Archive as the mandatory destination for critical backups (NFS or S3).

- Fast network storage to accelerate restores.

- Local disk only as a temporary or complementary layer, never as the only backup repository.

Example architectures with PBS and Archive on Stackscale

1. Proxmox private cloud with Archive as primary repository and a fast recovery layer

- Proxmox VE cluster in Madrid.

- Proxmox Backup Server deployed as a VM or bare-metal server on Stackscale.

- Primary PBS datastore hosted on Stackscale Archive storage (in Madrid), accessed via NFS or the S3-compatible API.

- Optionally, an additional datastore on fast network storage (SSD/NVMe over network) to speed up frequent restores.

In this design, backup jobs always write to Archive, which acts as the golden copy.

Optionally, you can define additional jobs or internal PBS sync jobs to the fast datastore to maintain a “hot” layer for faster restores of specific machines.

To comply with a 3-2-1 and multi–data center strategy, a common pattern is:

- Keep the primary datastore in Archive Madrid.

- Periodically sync that datastore to Archive Amsterdam using PBS remote sync jobs.

This way, even if an entire data center fails, the organization still has recoverable copies in the other region.
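The 3-2-1 rule lends itself to a mechanical check: at least three copies, on at least two different types of storage media, with at least one copy off-site. The Python sketch below encodes that check; the `Copy` fields and the example layout (production disks plus Archive Madrid and Archive Amsterdam) are illustrative assumptions, and "media type" is modeled loosely as a storage-class label. Whether a second Madrid data center counts as off-site is a policy decision; here, only a different region does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str
    site: str   # region holding this copy
    media: str  # storage class, e.g. "all-flash" or "archive"


def satisfies_3_2_1(copies: list[Copy], primary_site: str) -> bool:
    """At least 3 copies, 2 distinct media types, 1 copy outside the primary site."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    offsite = any(c.site != primary_site for c in copies)
    return enough_copies and enough_media and offsite


plan = [
    Copy("production disks", site="madrid", media="all-flash"),
    Copy("PBS datastore (Archive Madrid)", site="madrid", media="archive"),
    Copy("sync target (Archive Amsterdam)", site="amsterdam", media="archive"),
]
print(satisfies_3_2_1(plan, primary_site="madrid"))      # True
print(satisfies_3_2_1(plan[:2], primary_site="madrid"))  # False: no off-site copy
```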

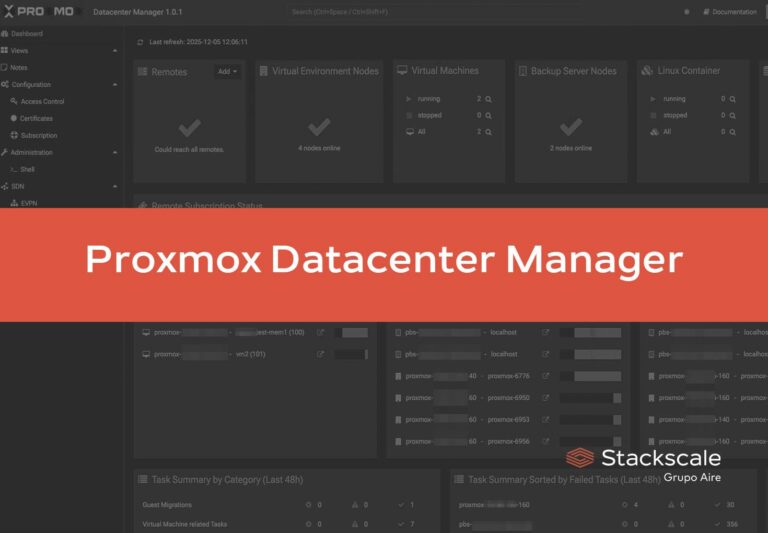

2. Multi-cluster platforms with namespaces and Archive as the single source of truth

- Several dedicated Proxmox clusters (production, preproduction, QA, customer environments, etc.) across different Stackscale data centers.

- One or more central Proxmox Backup Server instances, with datastores backed by Archive and namespaces separated by environment or internal customer.

- Optionally, additional datastores on faster network storage for environments that require very low RTO (for example, production).

In this model:

- All environments write their backups to Archive, organized into namespaces (for example: prod/, preprod/, qa/, customer-X/).

- Namespaces provide logical isolation and allow different retention policies and permissions while still benefiting from global datastore deduplication.

- For certain namespaces (like production), you can add a “fast” datastore that acts as an accelerated recovery layer, but always backed by Archive as the long-term, multi–data center repository.
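Namespace-level permissions can be pictured as ACL entries attached to hierarchical paths, optionally propagating to sub-paths. The sketch below is a simplified, hypothetical model: `AclEntry`, `roles_for()`, the user IDs, and the `/datastore/archive/...` paths are illustrative, and the role names are used only as labels. Real PBS ACL evaluation also handles groups, API tokens, and more specific entries overriding broader ones, which this union-of-roles model ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AclEntry:
    path: str        # e.g. "/datastore/archive/prod"
    user: str
    role: str
    propagate: bool  # whether the entry also applies to sub-paths


def roles_for(user: str, path: str, acl: list[AclEntry]) -> set[str]:
    """Collect the roles `user` holds on `path` (simplified union model)."""
    roles = set()
    for entry in acl:
        if entry.user != user:
            continue
        if entry.path == path:
            roles.add(entry.role)
        # Match only whole path components, so ".../prod" does not cover ".../prodx".
        elif entry.propagate and path.startswith(entry.path.rstrip("/") + "/"):
            roles.add(entry.role)
    return roles


acl = [
    AclEntry("/datastore/archive/prod", "ci-prod@pbs", "DatastoreBackup", propagate=True),
    AclEntry("/datastore/archive", "auditor@pbs", "DatastoreAudit", propagate=True),
]
print(roles_for("ci-prod@pbs", "/datastore/archive/prod/web", acl))  # {'DatastoreBackup'}
print(roles_for("ci-prod@pbs", "/datastore/archive/qa", acl))        # set()
print(roles_for("auditor@pbs", "/datastore/archive/qa", acl))        # {'DatastoreAudit'}
```

The production backup user can write only under its own namespace, while an auditor granted rights at the datastore level sees every environment, and all of them still share one deduplicated chunk store.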

In this way, the organization combines:

- Governance and segregation (namespaces + RBAC).

- Robust, isolated copies on Archive.

- And, where needed, very fast restores from higher-performance network storage.

3. Backups of physical hosts and specific services with Archive as the primary target

Proxmox Backup Server is not limited to VMs and containers: the backup client can be installed on physical Linux servers to back up files or volumes.

On Stackscale, this makes it possible to:

- Protect specific appliances (for example, physical database servers, legacy application nodes, or specialized servers).

- Send those backups directly to Stackscale Archive storage, using NFS or S3-compatible access as the main PBS backend.

By placing these backups on Archive:

- You decouple the backup from the physical hardware itself (which is often a single point of failure).

- You gain multi–data center redundancy by replicating PBS datastores between Archive Madrid and Archive Amsterdam.

- Long-term retention becomes more cost-efficient on a per-GB basis, while keeping the option to restore in another region if needed.

If you need to speed up certain restores (for example, for regular DR drills or frequent incidents on a critical physical server), you can add an additional datastore on fast network storage, always on the assumption that the “definitive” copy lives on Archive.

Best practices when designing the solution

When combining Proxmox Backup Server with Archive on Stackscale, it’s recommended to:

- Define a clear retention policy (daily, weekly, monthly) using PBS pruning options and the prune simulator to visualize its long-term effect.

- Separate operational backups (fast restores) from archival backups (long-term retention) into different datastores or namespaces.

- Take advantage of per-user traffic control and S3 bandwidth limiting so backup windows don’t saturate the network or impact production services.

- Integrate PBS with the corporate identity system (LDAP/AD/OIDC) and enable 2FA for administrative logins.
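To reason about a retention policy before applying it, the Python sketch below models keep-last, keep-daily, keep-weekly, and keep-monthly as we understand the documented PBS rules: options are applied in that order, each keeps the newest backup of its last N distinct periods, and periods already represented by a kept backup are not counted again. `prune()` is a hypothetical, simplified helper (no keep-hourly or keep-yearly, no protected snapshots); the PBS prune simulator remains the reference for real behavior.

```python
from datetime import datetime, timedelta


def prune(times: list[datetime], keep_last: int = 0, keep_daily: int = 0,
          keep_weekly: int = 0, keep_monthly: int = 0) -> set[datetime]:
    """Return the backup times to keep (simplified PBS-style retention)."""
    newest_first = sorted(times, reverse=True)
    keep: set[datetime] = set(newest_first[:keep_last])

    rules = [
        (keep_daily, lambda t: t.strftime("%Y-%m-%d")),
        (keep_weekly, lambda t: "%d-W%02d" % t.isocalendar()[:2]),  # ISO year/week
        (keep_monthly, lambda t: t.strftime("%Y-%m")),
    ]
    for count, period_of in rules:
        if count <= 0:
            continue
        # Periods already represented by a kept backup do not count again.
        covered = {period_of(t) for t in keep}
        selected = set()
        for t in newest_first:
            if t in keep:
                continue
            period = period_of(t)
            if period in covered or period in selected:
                continue  # an older backup in an already-represented period
            if len(selected) >= count:
                break
            selected.add(period)
            keep.add(t)
    return keep


backups = [datetime(2025, 11, 1, 2, 0) + timedelta(days=i) for i in range(20)]
kept = prune(backups, keep_last=2, keep_daily=3, keep_weekly=2)
print(sorted(t.day for t in kept))  # [2, 9, 16, 17, 18, 19, 20]
```

With daily backups from 1 to 20 November 2025, this policy retains the five most recent backups plus the newest backup of ISO weeks 45 and 44; week 46 is skipped by keep-weekly because 16 November already represents it.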

Quick summary for administrators

- What it is: Proxmox Backup Server is an enterprise, open source backup solution based on Debian and written in Rust, with native ZFS support, deduplication, Zstandard compression, and client-side authenticated encryption.

- What it’s for: protecting VMs, containers, and physical hosts with fast, efficient incremental backups, natively integrated with Proxmox VE and with support for tape and S3-compatible storage.

- Key new features in 4.1: based on Debian 13.2 “Trixie”, Linux kernel 6.17, ZFS 2.3.4, per-user traffic control, configurable parallelism for verification jobs, and bandwidth limiting for S3 endpoints.

- How it fits into Stackscale: it can be deployed as a VM or bare-metal node inside a Proxmox private cloud, using Stackscale’s Archive storage as an NFS or S3-compatible backend, available in Madrid and Amsterdam, to build multi–data center backup architectures with fast local restores and long-term off-site copies.

If you’re already considering Proxmox as a virtualization alternative, or you want to strengthen your backup strategy on Proxmox infrastructures running on Stackscale, Proxmox Backup Server + Archive is a very solid combination to gain resilience without giving up the open source model or sovereignty over your data.