Energy efficiency is essential for building sustainable solutions and ensuring long-term growth. Maximizing the benefits of technology while reducing its impact on the planet is one of the main challenges data centers face today.

Data centers require a vast amount of energy to keep running. Energy efficiency is therefore a priority, not only for sustainability but also for cost, especially as energy bills have risen sharply over time.

Data center power management

There is no doubt that data center power management is one of the most complex challenges in the industry. Data centers can implement many methods to increase energy efficiency, and new systems continue to enter the market. In addition, factors such as locating data centers in cooler climates can considerably reduce energy consumption and costs.

That is why large, specialized data centers are constantly working to improve their energy efficiency. To do so, they implement all kinds of innovative techniques to reduce their PUE.

What is the data center PUE?

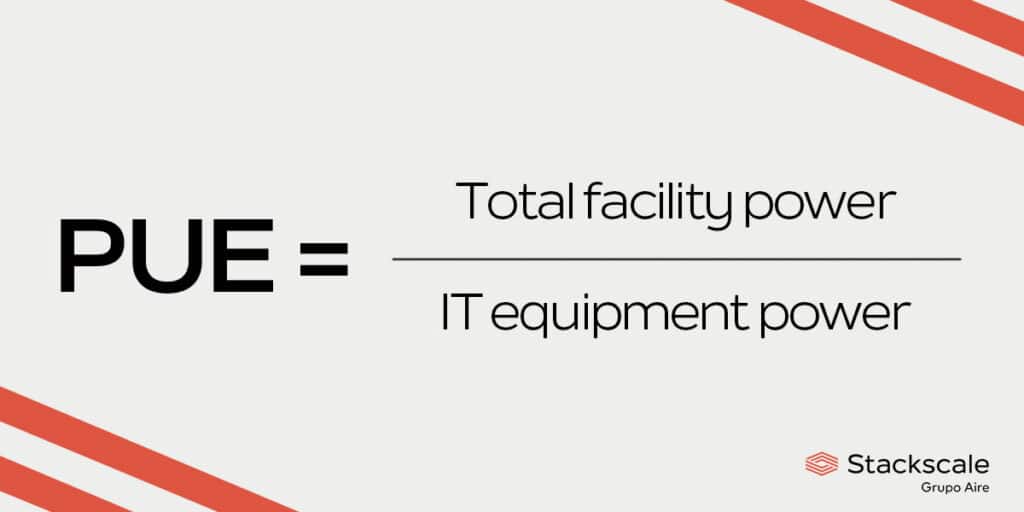

PUE, short for Power Usage Effectiveness, is the value obtained by dividing the total amount of energy used by a data center facility by the energy delivered to the data center’s computing equipment. The facility total covers the computing equipment itself plus overhead such as lighting and cooling.

PUE = Total facility power / IT equipment power

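The PUE ratio indicates how efficiently a data center uses energy and, more precisely, what share of the total energy actually reaches the computing equipment. The ideal value is a PUE of 1.0, which would mean that all the energy consumed goes to computing and none to overhead.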
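As a minimal sketch of the formula above, the following Python function computes PUE from two power readings. The function name `pue` and the sample readings (1,000 kW of IT load, 400 kW of cooling, 100 kW of lighting and other overhead) are hypothetical values chosen for illustration, not measurements from any real facility.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be lower than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical readings, for illustration only.
it_kw = 1000.0                 # power delivered to computing equipment
overhead_kw = 400.0 + 100.0    # cooling + lighting and other overhead
ratio = pue(it_kw + overhead_kw, it_kw)
print(f"PUE = {ratio:.2f}")    # PUE = 1.50
```

With no overhead at all, the total would equal the IT power and the function would return the ideal value of 1.0.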

This metric was originally developed in 2007 by The Green Grid, a non-profit IT organization. Like any other method, it has both strengths and weaknesses. All in all, though, it is the most popular and the most effective method for measuring efficiency in data centers today. In 2016, it was published as a global standard under ISO/IEC 30134-2:2016 and as a European standard under EN 50600-4-2:2016.

Average data center PUE globally

According to the Uptime Institute Global Data Center Survey 2023, the average annualized PUE globally was 1.58. It is worth noting that the global average PUE has stalled since 2018, staying between 1.55 and 1.60 except for a rise to 1.67 in 2019.

Why is that?

One of the reasons for this stagnation is probably that the survey has expanded its reach into additional territories. While it used to be dominated by North American and European data centers, it now offers a more global overview. As a result, factors such as the heavier cooling demands of warmer climates contribute to this trend. In warmer and more humid regions such as the Middle East and Latin America, the average PUE is above 1.70.

Nevertheless, other causes also prevent the global average PUE from continuing to decrease, such as the high cost and technical complexity of improving efficiency in older and smaller data centers. According to the survey, data centers built during the past five years are more efficient. For example, new facilities in North America and Europe have a PUE below 1.40.
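To make the survey figures more tangible, a PUE value can be read as overhead per unit of IT energy: a PUE of 1.58 means 0.58 kWh of overhead for every 1 kWh delivered to computing equipment. The short sketch below applies that reading to the three figures quoted above. The helper names are hypothetical, and 1.70 and 1.40 are used as boundary values because the survey only reports "above" and "below" for those cases.

```python
def overhead_per_it_kwh(pue: float) -> float:
    """kWh of non-IT overhead spent for each kWh delivered to IT equipment."""
    return pue - 1.0


def it_share(pue: float) -> float:
    """Fraction of total facility energy that reaches IT equipment."""
    return 1.0 / pue


# PUE figures quoted from the survey; labels are descriptive only.
figures = [
    ("global average, 2023", 1.58),
    ("warmer, more humid regions (at least)", 1.70),
    ("new builds in North America and Europe (at most)", 1.40),
]

for label, value in figures:
    print(
        f"{label}: {overhead_per_it_kwh(value):.2f} kWh overhead per IT kWh, "
        f"{it_share(value):.1%} of energy reaches IT"
    )
```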

Data center energy efficiency measures

Compared to traditional on-premises data centers, large data centers have a positive effect on the power consumed for computing, since physical hardware is utilized far more efficiently. However, as the need for faster and more complex computing services increases, so does the need to reduce data centers’ footprint.

Let’s look at some of the most common energy efficiency measures data centers can (and do) put in place to reduce their power consumption while ensuring a resilient and efficient service.

Free cooling

Data center operators can save a great amount of energy by optimizing cooling systems. One of the most popular and efficient approaches today is “free cooling”, which uses low outdoor temperatures to cool data center facilities. Its adoption has grown quickly thanks to its benefits in terms of cost and energy efficiency.

Cooling systems in data centers can account for almost half of total electricity use.

How exactly does free cooling work?

The free cooling system turns on when the outdoor temperature is lower than the indoor temperature achieved by the air conditioning system. Once the outdoor temperature drops to the set temperature, a modulating valve allows all or part of the water to go through the free cooling system instead of through the air conditioning system’s chiller.
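The valve behavior described above can be sketched as a simple function that returns the fraction of water routed through the free cooling system. This is only an illustration under stated assumptions: the linear ramp between the two thresholds, the function name, and the example temperatures (18 °C set temperature, 8 °C for full free cooling) are all hypothetical, and real controllers use vendor-specific logic.

```python
def free_cooling_fraction(
    outdoor_c: float, set_point_c: float, full_free_cooling_c: float
) -> float:
    """Fraction of water (0.0 to 1.0) sent through the free cooling system.

    Assumption: the valve opens linearly as the outdoor temperature falls
    from set_point_c (valve closed) to full_free_cooling_c (valve fully open).
    """
    if full_free_cooling_c >= set_point_c:
        raise ValueError("full_free_cooling_c must be below set_point_c")
    if outdoor_c >= set_point_c:
        return 0.0  # too warm outside: all water goes through the chiller
    if outdoor_c <= full_free_cooling_c:
        return 1.0  # cold enough: all water bypasses the chiller
    return (set_point_c - outdoor_c) / (set_point_c - full_free_cooling_c)


# Hypothetical thresholds and outdoor temperatures, for illustration only.
for outdoor in (20.0, 13.0, 5.0):
    share = free_cooling_fraction(outdoor, set_point_c=18.0, full_free_cooling_c=8.0)
    print(f"{outdoor:.0f} °C outside -> {share:.0%} of water through free cooling")
```

The intermediate case (13 °C here) corresponds to the "part of the water" scenario, where the chiller and the free cooling system share the load.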

Free cooling can be either direct or indirect:

- Direct free cooling. The air flow pushes cold outdoor air into the data center and warm indoor air out. However, as simple as it might sound, outdoor air must be filtered and adjusted to the data center’s temperature and humidity parameters.

- Indirect free cooling. One or two heat exchangers are used to transfer heat to the outside air, so outdoor air does not enter the data center. Single-stage indirect free cooling systems pass indoor air through an air/air heat exchanger. Two-stage indirect free cooling systems use one heat exchanger to transfer heat into a liquid and a second one to release it to the outside air.

Data centers save a lot of energy by using a natural cooling source for air conditioning instead of an electrical chiller. This is especially important considering that cooling systems can account for almost half of a data center’s total energy consumption.

Cooling and heat management

Apart from free cooling, there are many other measures for achieving more sustainable cooling and heat management in data centers. Here are some examples:

- Cold air and hot air containment. Implementing physical barriers to prevent cold air in supply aisles from mixing with hot air in exhaust aisles.

- Natural refrigerants. Using natural refrigerants for cooling instead of hydrochlorofluorocarbons (HCFCs) and hydrofluorocarbons (HFCs). HCFCs deplete the ozone layer and HFCs are potent greenhouse gases, whereas natural refrigerants such as water (R718) or ammonia (R717) neither deplete the ozone layer nor contribute significantly to global warming.

- Direct Liquid Cooling (DLC). A cooling method that uses liquids, instead of air, to remove heat from IT equipment. This significantly reduces the dependence on air conditioning systems. Although free cooling is still predominant, DLC is expected to grow over the coming years, according to Uptime Intelligence.

- Higher cooling temperature setpoint. Raising temperature setpoints in data centers lowers power consumption and extends the cooling systems’ lifespan.

- Heat recovery and recycling. Reusing data centers’ excess heat to warm nearby households, offices and schools can significantly reduce emissions. Heat recovery is a smart way of minimizing energy waste and optimizing energy consumption, especially given that data centers generate a huge amount of excess heat from all the equipment and systems they host.

- Cooling system modernization. Simply by updating cooling systems, data centers can considerably improve energy efficiency. For example, Interxion updated the cooling systems in its Madrid data center MAD1 and increased free cooling from 2,700 to 7,700 hours per year.
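To put the MAD1 figures from the last item in context, the sketch below expresses free cooling hours as a share of the year. It assumes a non-leap year of 8,760 hours, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, assuming a non-leap year


def free_cooling_share(hours: float) -> float:
    """Fraction of the year during which free cooling is available."""
    if not 0 <= hours <= HOURS_PER_YEAR:
        raise ValueError("hours must be between 0 and 8,760")
    return hours / HOURS_PER_YEAR


before = free_cooling_share(2700)  # before the upgrade
after = free_cooling_share(7700)   # after the upgrade
print(f"before: {before:.1%} of the year")  # before: 30.8% of the year
print(f"after:  {after:.1%} of the year")   # after:  87.9% of the year
```

In other words, under that assumption the upgrade moved free cooling from under a third of the year to almost nine tenths of it.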

Artificial Intelligence and Machine Learning

The use of Artificial Intelligence (AI) in data centers can also bring considerable benefits in terms of performance, costs and efficiency. Large data centers already use AI and Machine Learning (ML) to identify new efficiency improvements. They use deep learning and artificial neural networks for:

- optimizing cooling systems,

- predicting power use,

- and improving PUE ratios and data center management in general.
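As a toy illustration of what "predicting power use" means, the sketch below fits a straight line relating outdoor temperature to cooling power and uses it to estimate power at a new temperature. It deliberately swaps in ordinary least squares for the deep learning models mentioned above, which are far more elaborate. The function name and all readings are made up for illustration and do not come from any real data center.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally sized samples with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up readings: outdoor temperature (°C) vs. cooling power (kW).
outdoor_temp_c = [10.0, 15.0, 20.0, 25.0, 30.0]
cooling_power_kw = [200.0, 250.0, 300.0, 350.0, 400.0]

slope, intercept = fit_line(outdoor_temp_c, cooling_power_kw)
predicted_kw = slope * 22.0 + intercept  # estimate for a 22 °C day
print(f"estimated cooling power at 22 °C: {predicted_kw:.0f} kW")
```

A production system would use many more inputs (IT load, humidity, equipment state) and a more expressive model, but the principle is the same: learn from past readings, then predict.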

The potential to improve and optimize data center management by applying AI is huge. Data centers already use AI to improve many other aspects, such as analyzing risks, forecasting demand, improving equipment maintenance or optimizing data centers’ design and operations. AI can even be used to find solutions to more complex issues, such as better integrating data centers into cities.

Renewable energies

Regarding energy consumption, using 100% clean and renewable energy is also a priority among major data centers. In recent years, they have been working hard to reduce their reliance on fossil fuels, and they have invested significant resources in increasing efficiency and reducing their environmental footprint.

That is one of the reasons why we rely on high-quality, specialized data centers that are deeply committed to delivering the best balance of performance, security and efficiency.

To sum up, data centers can implement numerous measures to increase their energy efficiency. Although there is always room for improvement, innovative new measures for boosting efficiency and reducing energy consumption in data centers keep appearing.

Sources: Uptime Institute and Uptime Intelligence.